Damian Isla approached AI-aided content creation for games by looking at two possible solutions: (1) Michael Mateas’ view that we need a new breed of engineering-competent designers, and (2) Chris Hecker’s view that we need better authoring paradigms (“The Photoshop of AI”). Damian showed examples of state-of-the-art applications from three categories:

(1) Causal (“when A happens, do B”),

(2) Learning (and behavior capture), and

(3) Planning.

For each of the categories he showed screenshots of interfaces illustrating the approaches, among them Endorphin (NaturalMotion), Havok Behavior, Autodesk, The Restaurant Game (Jeff Orkin), AC Knowledge viewer (TruSoft), Assassin’s Creed (Ubisoft), Halo 3, The Sims, F.E.A.R. (Monolith Productions), Final Fantasy 12 (Square Enix), SpirOps AI, Zombie (Steve Marotti, Nihilistic Software), Situation Editor (Brian Schwab, Sony), BT Editor Prototype (Alex Champandard), and Façade (Mateas & Stern).

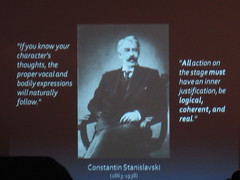

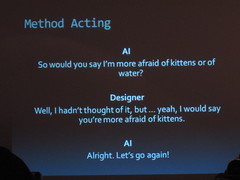

Damian went on to quote Stanislavsky (at which point I almost fell in love with the speech),

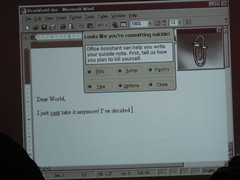

and then showed a mockup of the Office assistant helping out with a suicide letter (at which point I DID fall in love),

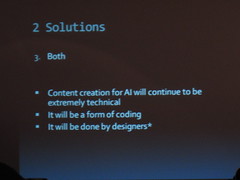

and closed his circle of arguments by returning to the two possible solutions. Damian thinks we need both, and I agree. It’s nothing new to say that AI needs to be done in cooperation with designers, but what Damian is saying is that it should be done BY designers, either in code or by using technical solutions for content creation (and we need more of those). I might of course be biased given the work I do… but hey, there is a reason for it. Yay for Damian!

I realized later in the day that I had missed a really good speech in the morning: Tan Le’s presentation about Emotiv, “The Brain - Revolutionary Interface for Next-Generation Digital Media”. Emotiv has developed a helmet that reads the brain’s EEG signals, and they have managed to build a system that filters the noise from those signals well enough to let a player move 3D objects by pure thought! Still in Orlando, after disembarking from the ship, I watched a presentation of the system that is available on YouTube. …Could the Marvin who is up on stage there be Marvin Minsky? I really hope I can find some time after I’m done with the dissertation to play around with the system: there is an SDK for it. (Thanks to David Gibson for sending me the link and summarizing her whole speech in conversation :))