In the afternoon of the WRPG workshop (see previous post) we sat down to do some prototyping. Anne had brought lots of colorful prototype materials, such as glass tokens and modeling clay.

We started by throwing out ideas for questions to explore. Some of the ones I remember were:

- Use Mark's general proposal for how to first think about a concept, and see how it can be represented as a game mechanic. Then break out said game mechanic, implement it in isolation, and see if it really does represent the concept in question.

- Given the rich body of work in philosophy regarding how we as humans are to act – isn’t it curious that most systems governing agent action selection are based on utility and on maximizing the agent's own success? What other ways can we use for creating principles for agent action selection?

- Experiment with constitutive rule systems rather than restrictive ones.

We spent perhaps half an hour brainstorming questions until we formulated what question to work with:

How can we model a non-utilitaristic ethics system?

By non-utilitaristic* we meant that the agents would be motivated by things other than their own success (measured by context and utility).

We decided to have a world where there is stuff, and where agents can own stuff.

We outlined some of the affordances.

It is possible for an agent to want to:

- Have

- Not have

- Give

- Take

- Share

We started to outline some principles, such as:

- Everyone should have everything

- Utilitarianism (acting to maximise ‘goodness’, no matter to whom the goodness goes, as long as it is maximized, according to a common or individual view of what goodness is)

- Everyone should have an equal amount of stuff.

Then, we started to put more and more principles and wants onto playing cards in order to use them as constitutive rules. Here are a few of them:

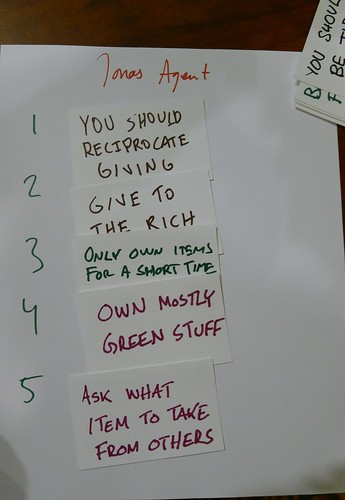

Some of them were principles of ethics, such as “give to the rich” or “everyone should have an equal amount.” Others were more gamey, such as “you only want green stuff”. Some cards we had to discard because they went outside the affordances of the game. For example, “You should be tidy” was not something an agent could act on. Green stuff was possible to use because we had green items on the table, and agents could acquire them. …And even though we agreed to not equip our agents with needs and desires outside approaches to ownership of stuff, we made sure to grab some cookies for them in the break.

We divided ourselves so that we had 3 agents, played by Emmett, Anne and Jonas Linderoth. Elina and I formed a player-team, and Jon and Mark formed the other. We decided that each play team could give 3 rule/principle cards to one agent, and two cards to the third agent.

Each play team could instantiate one agent each, and both had an impact on the third. The cards were closed: teams couldn’t see each other’s cards. Each team could choose from half of the total deck, not knowing what cards were in the other team’s half of the deck. Once the agents got their rule cards they could start acting (turn-based).

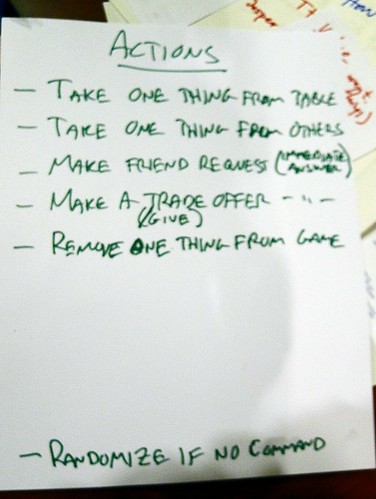

Anne, Emmet and Jonas started acting, and we threw down a list of possible actions:

We were also curious about how Jon’s Designscape method would work in practice, so we tried the method during the workshop. Jon would occasionally ask us about how we rated the different design aspects of our game. Three times during the process we gave a rating between 1 and 6 on how much we trusted certain design aspects. Here are the aspects we rated:

1. Players explore the ethical principles of the agents

2. Building your own ethic system Agent

3. Your agent can interact with other agents

4. Evolution from survival to music taste

5. Your agent can pick up values from other Agents

6. Underlying ethical principles as guidelines

7. The agent interaction format

8. Global rules of interaction

9. Agents that are your own, others and shared

With Jon's tool it would be possible to see a 3-dimensional representation of how we subjectively rated them, with a time progression, something like this.

For the second round we had the list of actions, and we also wrote some new rule cards. We took out some cards that had been difficult for the agents to use. Those were cards that described principles or rules that the agents lacked ways of implementing, as they were not afforded by the list of actions or by what things were available on the board. Oh, we also tidied up the table and placed on a board the items that the agents would be able to take/give/destroy.

For the second round we also ordered each agent's rules by priority so that the agents would know how to prioritize among their principles/rules/goals. Here is a picture of Jonas' agent (made by Mark and Jon) in the second round:

There were a couple of fun instances. One was when Emmet suddenly reached out and took a bite from Jonas’ cookie (he was destroying an item). Jonas was really happy about it, because he then got another unique item, something he had on one of his cards. Another was when Emmet suddenly threw a bag of blue stones over his shoulder, again following his agent's "destroy-item" principle. We laughed a lot.

What I was especially happy about, and positively surprised by, was how we almost magically implemented so many of the ideas that we talked about at the beginning of the session, in our initial half-hour of brainstorming. We had actions AND action selection being pretty much constitutive given the cards. The agents did express different types of principles, even though they were so limited in terms of affordances. The ownership aspect was well chosen, and it was good that the choice was made so early in the session, which actually only lasted for three hours. It was also nice to have different decks of cards for the play groups, using mechanics similar to those of Dominion and Fluxx: rules and affordances can change with the composition of the deck or the cards in "the hand", or in our case, the principle cards our "agents" got as instructions for how to choose which action to take. We even implemented someone's comment about creating a player "robot", authored by the co-players (that would be the agents).
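For readers who think in code, the second-round setup (prioritized principle cards plus a fixed list of take/give/destroy actions) could be sketched roughly as below. This is only an illustration: the workshop was played with physical cards and human "agents", and every name here (`World`, `Card`, `choose_action`, the three card functions) is hypothetical, as is the assumption that "destroy" acts on an agent's own items.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# An action is (verb, item, receiving agent or None).
# The verbs mirror the take/give/destroy actions of the second round.
Action = tuple[str, str, Optional[str]]


@dataclass
class World:
    board: list[str]             # items nobody owns yet
    owned: dict[str, list[str]]  # agent name -> items it owns


@dataclass
class Card:
    text: str
    # Returns the action this card asks for, or None if it cannot
    # be acted on in the current state of the world.
    propose: Callable[[str, World], Optional[Action]]


def only_green(me: str, world: World) -> Optional[Action]:
    """Hypothetical reading of 'you only want green stuff'."""
    for item in world.board:
        if item.startswith("green"):
            return ("take", item, None)
    return None


def give_to_the_rich(me: str, world: World) -> Optional[Action]:
    """Hypothetical reading of 'give to the rich'."""
    others = [a for a in world.owned if a != me]
    if not world.owned[me] or not others:
        return None
    richest = max(others, key=lambda a: len(world.owned[a]))
    return ("give", world.owned[me][0], richest)


def destroy_items(me: str, world: World) -> Optional[Action]:
    """Assumption: the agent destroys one of its own items."""
    if world.owned[me]:
        return ("destroy", world.owned[me][0], None)
    return None


def choose_action(me: str, cards: list[Card], world: World) -> Optional[Action]:
    """Walk the cards in priority order; the first actionable card wins."""
    for card in cards:
        action = card.propose(me, world)
        if action is not None:
            return action
    return None


def apply(me: str, action: Action, world: World) -> None:
    """Carry out an action on the shared world."""
    verb, item, other = action
    if verb == "take":
        world.board.remove(item)
        world.owned[me].append(item)
    elif verb == "give" and other is not None:
        world.owned[me].remove(item)
        world.owned[other].append(item)
    elif verb == "destroy":
        world.owned[me].remove(item)
```

Note that nothing in `choose_action` scores or maximizes anything: the agent simply follows the highest-priority card it is able to act on. For example, an agent holding "give to the rich" above "you only want green stuff" but owning nothing falls through to the second card and takes a green item from the board.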

Shared cognition bonanza. What a great group!

*non-utilitaristic is, I see in retrospect, a bit unfortunately named, since one naturally associates it with utilitarianism, where it is considered good to strive for the maximum overall goodness rather than maximizing the goodness for oneself. It was exactly an -ism like that we wanted to think about how to implement. We used the term because we were thinking of utility: normally one strives to make agents that maximize their own success given different parameters. Those parameters can change depending on context, giving different action choices different values. What we meant with the naming (which we didn't discuss like this in the workshop) was that we wanted agents that could make choices following principles of action other than maximizing value and/or success for the individual agent. ...and yes, one could argue that in that case one would put that as a success criterion for the agent and that it would end up the same, but that is beside the point. Point: agents with different ethical systems.